LLMs have been creeping relentlessly into our lives ever since the flagship model ChatGPT emerged and then spread across the entire Internet. However, while everyone is trying out LLMs, or even starting to rely on them for an easier and more enjoyable life, it should be noted that LLMs are still, essentially, a game of capital and modes of production played in the name of the pursuit of knowledge and truth.

# The battle of model size

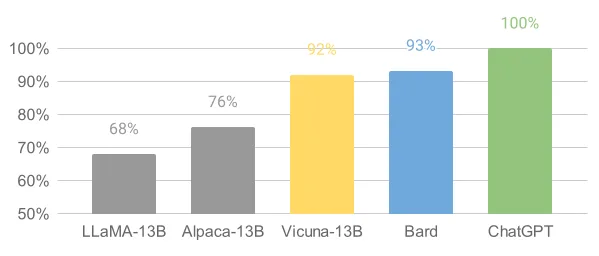

It is almost obvious that extra-large language models outperform smaller language models, such as ChatGLM, Alpaca and Llama, on most tasks, especially when it comes to logically intensive questions and answers. Under this assumption, there is simply no reason for individuals to deploy and use smaller-scale LLMs that would almost certainly perform worse than flagship models that are readily and freely available online, such as ChatGPT.

This is not only an alarm bell but a pending death sentence for all the medium-sized models whose sole purpose is to take on flagship models such as ChatGPT: they would have no potential users whatsoever.

Moreover, the future of such medium-sized models is constrained by the restrictive terms of service of what is often their most important data source, namely ChatGPT. Most of the well-performing medium-sized models, such as Alpaca, Vicuna and Koala, rely heavily on data generated by services affiliated with OpenAI. And, not to our surprise, even Google Bard was allegedly distilling ChatGPT data to improve its performance.

The deadliest blow comes from the absence of a comprehensive method to evaluate how well LLMs actually perform, which leaves small-scale LLMs no way to prove their worth. Current evaluation efforts are limited to scores given by GPT-4 (as in Vicuna), human evaluation over a limited set of questions (as in Koala), and evaluation against traditional NLP test sets. None of these methods is reliable or convincing enough to serve as a golden rule for picking the best model. As a result, the amount of exposure a model gets decides its rise and fall, leaving small-scale LLMs no chance against the established brands led by OpenAI and Microsoft.
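To make the point about limited question sets concrete, here is a minimal sketch of how much uncertainty a small pairwise evaluation carries. It assumes each question yields a simple win or loss for the smaller model against a flagship model, and it uses a standard Wilson score interval; the counts are hypothetical and are not taken from Vicuna, Koala, or any real benchmark.

```python
import math


def wilson_interval(wins: int, total: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a win rate (z = 1.96 gives roughly 95%)."""
    if total <= 0:
        raise ValueError("total must be positive")
    p = wins / total
    denom = 1 + z * z / total
    centre = (p + z * z / (2 * total)) / denom
    half = z * math.sqrt(p * (1 - p) / total + z * z / (4 * total * total)) / denom
    return centre - half, centre + half


# Hypothetical counts: the same 55% win rate measured on question sets
# of two different sizes.
for wins, total in [(44, 80), (550, 1000)]:
    low, high = wilson_interval(wins, total)
    print(f"{wins}/{total}: win rate {wins / total:.2f}, "
          f"approx. 95% interval [{low:.2f}, {high:.2f}]")
```

With these made-up counts, the interval for the 80-question set still contains 0.5, so the result cannot distinguish "slightly better" from "no better", while the 1000-question interval lies entirely above 0.5. This only captures sampling noise; it says nothing about judge bias or how representative the questions are.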

The development of LLMs is helplessly sliding down the slope toward monopoly. Credible competitors will be scarce. Eventually, smaller-scale LLMs will only be relevant in select use cases, such as offline deployment in private firms or mobile deployment on laptops and phones, where full-scale LLMs are not available or where the horsepower of a state-of-the-art LLM is simply not necessary.

# The dominance of capital

OpenAI CEO Sam Altman said in an interview on April 13th that the size of LLMs won’t matter as much moving forward. The remark can be read in two different ways.

First, if what he said was based on facts discovered in experiments conducted inside OpenAI, which is arguably the only entity on earth capable of conducting such research, then OpenAI will still be the one and only firm with the capital and the access to fine-tune the largest and most performant models in the world, which it owns. That automatically implies unfair competition to come.

Second, if the remark was just camouflage and increasing the size of the model does still boost performance, then it is essentially telling competitors to back down from the war of model size and research fine-tuning instead. OpenAI can easily snatch and copy that research whenever it is published, while continuing to increase the quantity and quality of its own training data.

In either case, OpenAI wins and the monopoly prevails.

# Implications and Thoughts

According to an anonymous source (Xiaoyi Ma), the gap in artificial intelligence technologies between states will only widen in the years to come. This will be partly because the Big NLPs control the biggest data, the biggest infrastructure, and the biggest capital, which will only attract even more investment and human resources to them.

What is even more alarming is that the development of open-source medium-sized models is very likely to be suppressed by the success of the flagship models, given the lack of proper evaluation methods and adequate public exposure. This would ultimately show up as slow replication and assimilation of such technologies among other states.

The introduction of accelerated training frameworks like DeepSpeed might narrow the seemingly uncrossable gap between medium-sized and large models. However, the lack of open data, and the fact that the highest-quality data comes from ChatGPT, still make me wonder whether the monopoly can ever be lifted.